Generations of Computers

The Evolution of Computers (Generations Explained):

Computers, as we know them today, did not appear overnight. They have evolved dramatically over the years, with each generation bringing leaps in technology, size, speed, and capabilities. Let's break the generations down in a simple, human-friendly way.

First Generation (1940s-1950s) Vacuum Tubes:

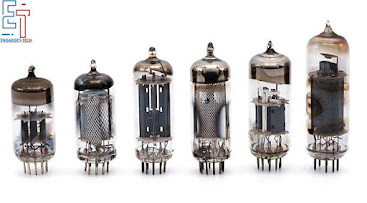

The first generation of computers began in the 1940s. These computers were gargantuan; they filled entire rooms! They used vacuum tubes for calculations. Vacuum tubes are sealed glass tubes that control electric current, but they had a major drawback: they tended to overheat and burn out easily, making the computers unreliable.

An example of a first-generation computer is the Electronic Numerical Integrator and Computer, better known as ENIAC, which was used for military calculations. First-generation machines were very slow by today's standards and were used primarily for basic arithmetic operations.

Second Generation (1950s-1960s) Transistors:

By the end of the 1950s, transistors had given rise to second-generation computers. Transistors are semiconductor devices that amplify or switch electrical signals, and they are small enough to replace the large, unreliable vacuum tubes. As a result, computers became much smaller, faster, and less power-hungry.

A second-generation computer could work much faster and more efficiently. For instance, a job that might have taken a first-generation machine hours could now be done in a fraction of that time. Even though these computers were still relatively large, they were much more reliable and were used for heavy-duty industrial and scientific work.

Third Generation (1960s-1970s) Integrated Circuits:

In the 1960s, a new breakthrough arrived with the introduction of integrated circuits. These are small pieces of silicon with many transistors packed together on them. This is how third-generation computers became much smaller and more powerful than their predecessors.

Computers could now handle several tasks at a time and were put to use in many applications, including business and commerce. Companies like IBM began manufacturing computers for big corporations and small offices alike. This is the age when computers started becoming common in ordinary workplaces around the world, not just in research laboratories.

Fourth Generation (1970s-Present) Microprocessors:

The arrival of microprocessors in the early 1970s marked the start of the fourth generation of computers. A microprocessor is a computer's entire central processing unit (CPU) on a single chip. This advancement drastically reduced the size and cost of computers.

Microprocessors made personal computers (PCs) possible and eventually led to the computers we use today. With the rise of companies like Apple and Microsoft in the 1980s, computers became more affordable and came within reach of the general public; this is when the home computing boom took place.

In addition, fourth-generation computers brought the graphical user interface into everyday use, which made computers more user-friendly. Users no longer had to type commands; they could work with icons and windows instead, making computers even easier to use.

Fifth Generation (Present and Beyond) Artificial Intelligence:

The fifth generation is what we have today, and it centers on artificial intelligence (AI) and machine learning. Fifth-generation computers are built on technologies that let them process information in ways that aim to mimic human thinking.

Think of systems like Siri, Alexa, or Google Assistant; these are great examples of how AI is being integrated into our daily devices. A computer like this is no longer just a machine that responds to inputs; it learns from experience, predicts outcomes, and makes decisions.

Conclusion:

Computers have gone from room-sized machines to slim smartphones. Each new generation has pushed the boundaries of what's possible, making computers smaller, faster, and more powerful. Today, we are entering a new era, with AI, quantum computing, and machine learning setting the stage for the future of technology.
